Difference between revisions of "SVM"

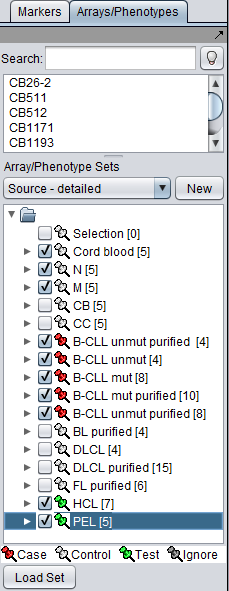

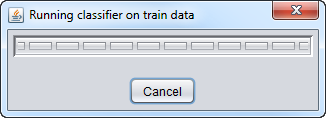

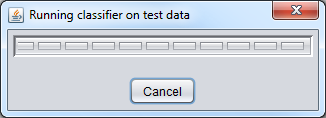

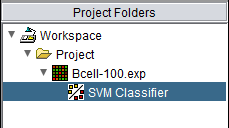

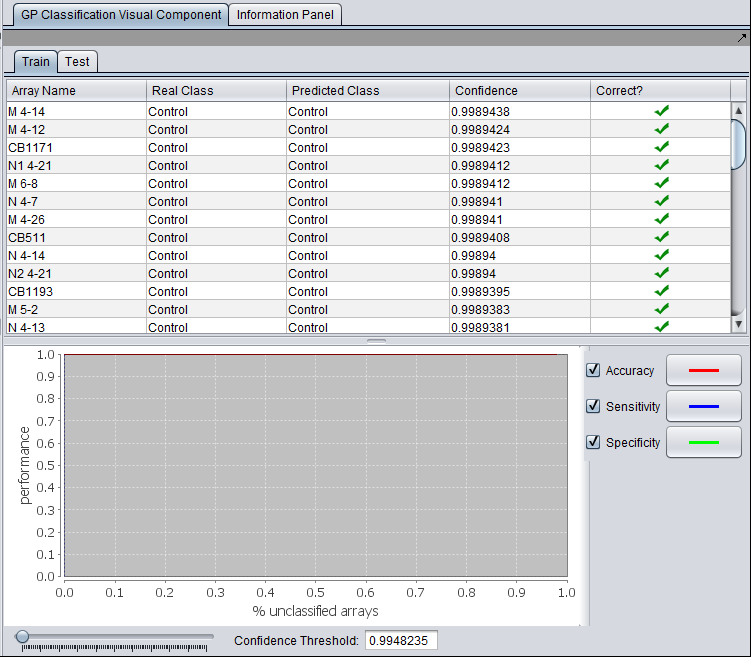

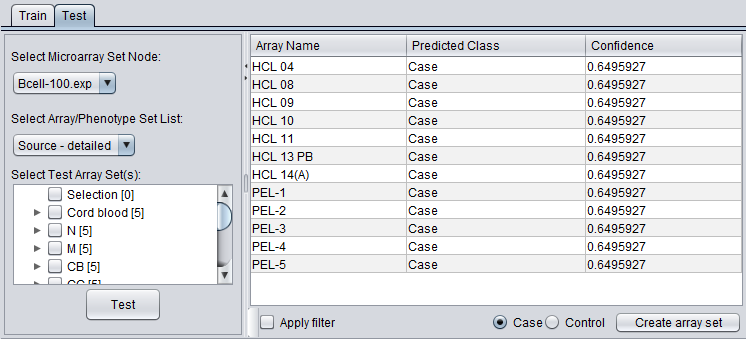

Revision as of 18:36, 7 March 2011

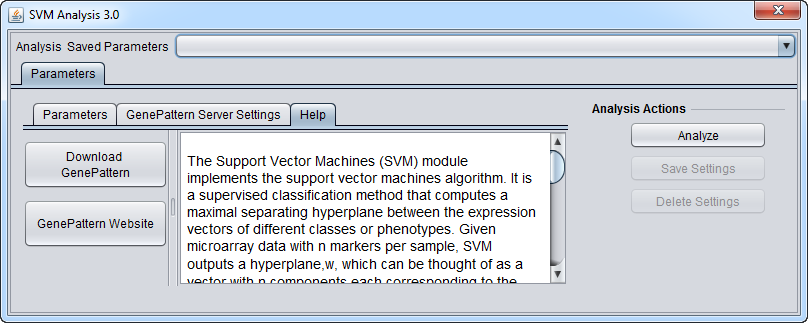

The Support Vector Machines (SVM) module implements the support vector machine algorithm, a supervised classification method that computes a maximum-margin separating hyperplane between the expression vectors of different classes or phenotypes. Given microarray data with n markers per sample, SVM outputs a hyperplane, W, which can be thought of as a vector with n components, each corresponding to the expression of a particular marker. Loosely speaking, assuming that the expression values of each marker have similar ranges, the absolute magnitude of each element in W indicates how important that marker is in classifying a sample.
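To make the role of W concrete, here is a minimal sketch in plain Python. It is not the module's own implementation: the solver (batch subgradient descent on the regularized hinge loss), the function names `train_linear_svm`, `predict`, and `rank_markers`, the parameters `lam`, `lr`, and `epochs`, and the toy expression values are all assumptions made for illustration. It fits a linear SVM on six samples with three markers, then ranks the markers by the absolute value of their weight in W.

```python
def train_linear_svm(X, y, lam=0.01, lr=0.1, epochs=500):
    """Fit a linear SVM by batch subgradient descent (illustrative solver only).

    X: list of samples, each a list of n expression values.
    y: list of class labels, each +1 or -1.
    Returns (w, b): the weight vector W with n components, and the bias.
    """
    n_samples, n_markers = len(X), len(X[0])
    w = [0.0] * n_markers
    b = 0.0
    for _ in range(epochs):
        # Gradient of the regularizer (lam / 2) * ||w||^2.
        grad_w = [lam * wj for wj in w]
        grad_b = 0.0
        for xi, yi in zip(X, y):
            margin = yi * (sum(wj * xj for wj, xj in zip(w, xi)) + b)
            if margin < 1:
                # Sample lies inside the margin: add the hinge-loss subgradient.
                for j in range(n_markers):
                    grad_w[j] -= yi * xi[j] / n_samples
                grad_b -= yi / n_samples
        w = [wj - lr * gj for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b


def predict(w, b, x):
    """Classify one sample by the side of the hyperplane it falls on."""
    score = sum(wj * xj for wj, xj in zip(w, x)) + b
    return 1 if score >= 0 else -1


def rank_markers(w):
    """Return marker indices ordered by decreasing |w_j|."""
    return sorted(range(len(w)), key=lambda j: abs(w[j]), reverse=True)


# Hypothetical toy data: marker 0 separates the two classes,
# markers 1 and 2 carry only small fluctuations.
X = [
    [1.0, 0.2, -0.1], [1.5, -0.1, 0.1], [2.0, 0.0, 0.0],       # class +1
    [-1.0, 0.1, 0.1], [-1.5, -0.2, -0.1], [-2.0, 0.0, 0.0],    # class -1
]
y = [1, 1, 1, -1, -1, -1]

w, b = train_linear_svm(X, y)
ranking = rank_markers(w)
print("W =", w)
print("markers by |W|:", ranking)  # marker 0 is ranked first
```

The ranking is only meaningful under the caveat stated above: if one marker's values spanned a much larger range than the others, its weight would be correspondingly smaller, so markers would need to be brought to a comparable scale before comparing magnitudes.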

References:

- R. Rifkin, S. Mukherjee, P. Tamayo, S. Ramaswamy, C-H Yeang, M. Angelo, M. Reich, T. Poggio, E.S. Lander, T.R. Golub, J.P. Mesirov, An Analytical Method for Multiclass Molecular Cancer Classification, SIAM Review, 45:4 (2003).

- T. Evgeniou, M. Pontil, T. Poggio, Regularization networks and support vector machines, Adv. Comput. Math., 13 (2000), pp. 1-50.

- V. Vapnik, Statistical Learning Theory, Wiley, New York, 1998.